Build a Video Search and Summarization Agent with NVIDIA AI Blueprint

NVIDIA AI Blueprint for Video Search and Summarization

Introduction

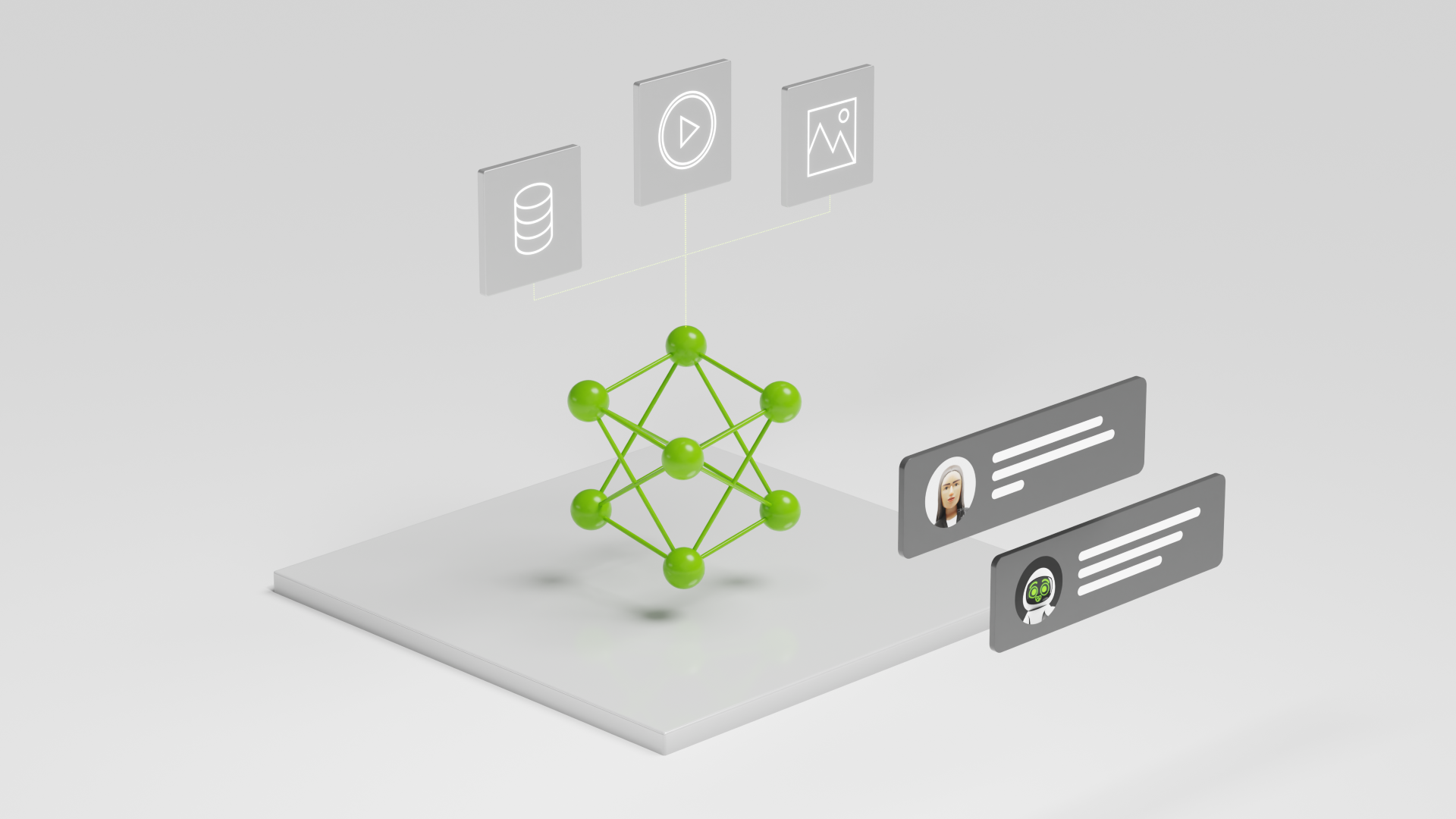

The NVIDIA AI Blueprint for Video Search and Summarization provides a reference workflow for long-form video understanding that combines vision language models (VLMs), large language models (LLMs), and Graph-RAG techniques. Developers can use it to build video analytics AI agents that handle tasks such as summarization, Q&A, and event detection on live streams.

Video Analytics AI Agent

The blueprint combines VLMs and LLMs with datastores to create a scalable, GPU-accelerated video understanding agent. The VLM pipeline decodes video chunks, generates embeddings for them, and uses a VLM to generate a per-chunk response to the user's query.

Video Ingestion

To summarize a video or answer questions about it, the agent must first build a comprehensive index that captures the important information in the footage. VLMs and LLMs produce dense captions and metadata, which are used to build a knowledge graph. Long videos are split into chunks, each chunk is captioned by a VLM, and the captions are then summarized and aggregated by the CA-RAG module.
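The chunking step can be illustrated with a minimal Python sketch. It is not the blueprint's implementation: the `Chunk` type, the `split_into_chunks` function, and the `overlap_s` parameter are hypothetical names chosen for illustration, and fixed-length windows are an assumption about how a long video might be divided.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    """A time window of the source video (hypothetical type, for illustration)."""
    index: int
    start_s: float
    end_s: float


def split_into_chunks(duration_s: float, chunk_s: float, overlap_s: float = 0.0) -> list[Chunk]:
    """Cover [0, duration_s] with windows of at most chunk_s seconds.

    Consecutive windows share overlap_s seconds, so an event that straddles
    a boundary is fully visible in at least one window (assumed design).
    """
    if chunk_s <= 0 or not 0 <= overlap_s < chunk_s:
        raise ValueError("need chunk_s > 0 and 0 <= overlap_s < chunk_s")
    if duration_s <= 0:
        return []

    step = chunk_s - overlap_s
    chunks: list[Chunk] = []
    start = 0.0
    while start < duration_s:
        # The last window is clipped to the end of the video.
        end = min(start + chunk_s, duration_s)
        chunks.append(Chunk(len(chunks), start, end))
        if end >= duration_s:
            break
        start += step
    return chunks
```

For example, `split_into_chunks(150, 60)` yields three windows, the last one shorter than the others. Shorter chunks give the VLM finer temporal detail at the cost of more captions to aggregate later.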

VLM Pipeline

- Decodes video chunks

- Generates embeddings

- Uses a VLM to generate per-chunk responses
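The three stages listed above can be sketched as a simple per-chunk loop. This is only an illustration of the data flow, not the blueprint's API: `run_vlm_pipeline`, `ChunkResponse`, and the `decode`, `embed`, and `generate` callables are hypothetical, and the `fake_*` functions are toy stand-ins so the example runs without a GPU or a model.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

# All names here are hypothetical; none come from the blueprint's API.
Frames = list[bytes]     # stand-in for decoded video frames
Embedding = list[float]  # stand-in for a visual embedding


@dataclass(frozen=True)
class ChunkResponse:
    chunk_id: int
    text: str


def run_vlm_pipeline(
    chunk_ids: Iterable[int],
    decode: Callable[[int], Frames],
    embed: Callable[[Frames], Embedding],
    generate: Callable[[Embedding, str], str],
    prompt: str,
) -> list[ChunkResponse]:
    """Run decode -> embed -> generate once per chunk and collect the responses."""
    responses = []
    for chunk_id in chunk_ids:
        frames = decode(chunk_id)            # 1. decode the video chunk
        embedding = embed(frames)            # 2. generate embeddings
        text = generate(embedding, prompt)   # 3. VLM response for this chunk
        responses.append(ChunkResponse(chunk_id, text))
    return responses


# Toy stand-ins that make the sketch runnable.
def fake_decode(chunk_id: int) -> Frames:
    return [bytes([chunk_id])] * 3


def fake_embed(frames: Frames) -> Embedding:
    return [float(len(frames))]


def fake_generate(embedding: Embedding, prompt: str) -> str:
    return f"{prompt} ({int(embedding[0])} frames)"


responses = run_vlm_pipeline(
    range(2), fake_decode, fake_embed, fake_generate, "Describe the scene"
)
```

Passing the stages in as callables keeps the loop independent of any particular decoder or model, which makes it easy to swap in real components or to process chunks in parallel.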

Caption Summarization (LLM)

- Uses an LLM prompt to combine the VLM captions

- Graph-RAG techniques extract key insights for summarization, Q&A, and alerts
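One way to picture how an LLM prompt combines per-chunk captions is a batched, multi-level reduction. The sketch below is an assumption about how such aggregation could work, not a description of the CA-RAG module: `build_prompt`, `aggregate_captions`, the prompt wording, and the `summarize` callable (a stand-in for an LLM call) are all hypothetical.

```python
from typing import Callable


def build_prompt(captions: list[str]) -> str:
    """Format a batch of captions as one summarization prompt (assumed wording)."""
    bullet_list = "\n".join(f"- {caption}" for caption in captions)
    return "Combine these video captions into one concise summary:\n" + bullet_list


def aggregate_captions(
    captions: list[str],
    summarize: Callable[[str], str],
    batch_size: int = 4,
) -> str:
    """Reduce many captions to one summary by summarizing in batches.

    Each pass summarizes groups of batch_size items; the passes repeat on the
    resulting summaries until a single one remains. A single caption is
    returned unchanged, without calling summarize.
    """
    if batch_size < 2:
        raise ValueError("batch_size must be at least 2 so each pass shrinks the list")
    if not captions:
        return ""

    level = list(captions)
    while len(level) > 1:
        batches = [level[i:i + batch_size] for i in range(0, len(level), batch_size)]
        level = [summarize(build_prompt(batch)) for batch in batches]
    return level[0]
```

Batching keeps each prompt within a bounded size regardless of video length, at the cost of extra LLM calls for the intermediate summaries.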

By leveraging these components, the AI agent can provide efficient video summarization, interactive Q&A, and customized alerts on live video streams.