F-VLM: Open-vocabulary object detection upon frozen vision and language models

F-VLM: Open-vocabulary Object Detection upon Frozen Vision and Language Models

Introduction

F-VLM is a framework for open-vocabulary object detection that aims to expand the limited set of annotated categories. It uses a frozen Vision and Language Model (VLM) as the detector backbone and a text encoder for caching detection text embeddings of offline dataset vocabulary. F-VLM outperforms state-of-the-art algorithms on the LVIS open-vocabulary detection benchmark and transfer object detection.

Methodology

The cosine similarity of region features and category text embeddings are used as detection scores. F-VLM uses the feature extractor for detector head training, which is the only step trained on standard detection data. The VLM backbone is used to inherit rich semantic knowledge and obtain VLM features for a region. The pooling layer, applied on the backbone output features, crops and resizes the region features with the ROI-Align layer to get fixed-size inputs.

Results

F-VLM achieves state-of-the-art on the LVIS benchmark and very competitive transfer detection on other datasets. F-VLM is efficient because the backbone runs in inference mode during training, saving training time and memory. F-VLM has potential for substantial memory savings at training time and runs almost as fast as a standard detector at inference time.

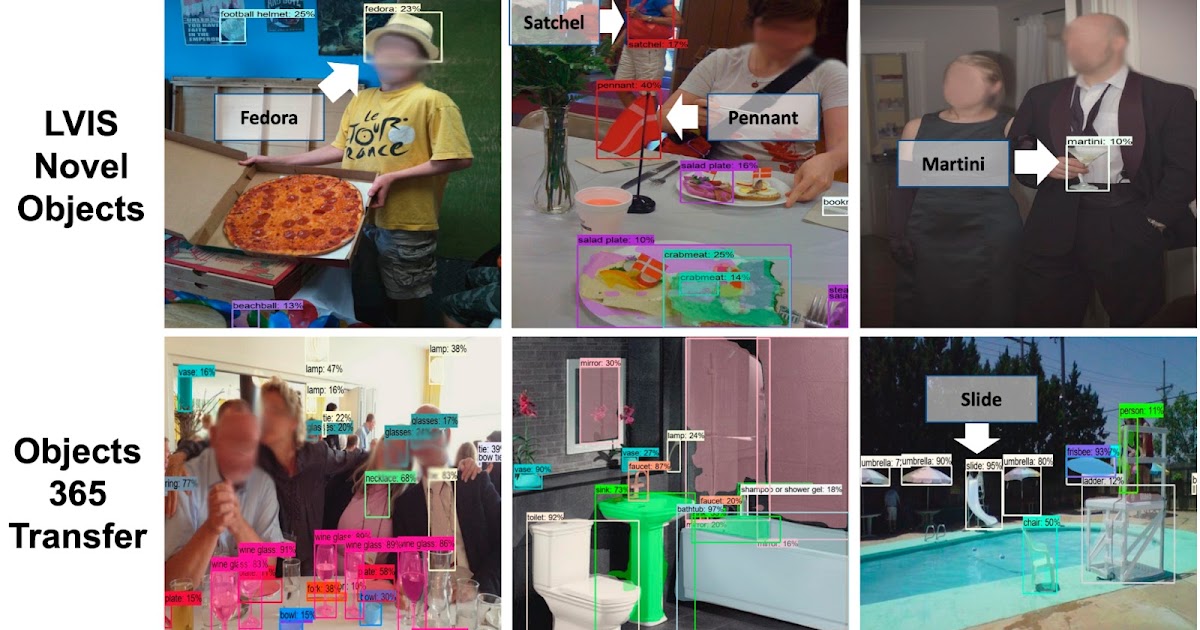

Visualization

F-VLM is visualized through open-vocabulary detection on LVIS and transfer detection on Objects365 dataset. The results show accurate detection of many categories.

Conclusion

F-VLM is a fast and efficient framework for open-vocabulary object detection that retains rich semantic knowledge from the frozen VLM backbone and achieves state-of-the-art performance.