Generate Stunning Images with Stable Diffusion XL on the NVIDIA AI Inference Platform

Table of Contents

- Challenges in Deploying Stable Diffusion XL (SDXL) in Production

- How NVIDIA AI Inference Platform Can Help

- Let’s Enhance: Harnessing the Power of NVIDIA AI Inference Platform

- Enhancing Product Images with SDXL on Google Cloud and NVIDIA AI Inference Platform

1. Challenges in Deploying Stable Diffusion XL (SDXL) in Production

- Enterprises face several challenges when deploying SDXL in production, beyond generating the image itself.

- SDXL image output may require further post-processing.

- AI pipelines must be automated so that generation and post-processing run without manual steps.

- Minimizing latency while increasing throughput adds further complexity.
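The tension between latency and throughput can be made concrete with a little arithmetic. The sketch below assumes that batches run back to back and that throughput is simply batch size divided by batch latency. The `BatchProfile` type and all timing numbers are hypothetical and are not measurements of SDXL.

```python
from dataclasses import dataclass


@dataclass
class BatchProfile:
    """Hypothetical timing for one batch size (illustrative numbers only)."""
    batch_size: int
    latency_s: float  # seconds until the whole batch has finished


def throughput(profile: BatchProfile) -> float:
    """Images completed per second when batches run back to back."""
    return profile.batch_size / profile.latency_s


def best_batch_under_budget(profiles, latency_budget_s):
    """Pick the highest-throughput profile whose latency fits the budget."""
    eligible = [p for p in profiles if p.latency_s <= latency_budget_s]
    if not eligible:
        return None
    return max(eligible, key=throughput)


# Made-up measurements: larger batches complete more images per second,
# but every request in the batch waits longer for its result.
PROFILES = [
    BatchProfile(batch_size=1, latency_s=4.0),
    BatchProfile(batch_size=2, latency_s=7.0),
    BatchProfile(batch_size=4, latency_s=12.0),
]

if __name__ == "__main__":
    for p in PROFILES:
        print(f"batch={p.batch_size} latency={p.latency_s:.1f}s "
              f"throughput={throughput(p):.3f} img/s")
    choice = best_batch_under_budget(PROFILES, latency_budget_s=8.0)
    print("chosen batch size:", choice.batch_size)
```

With these assumed numbers, an 8-second latency budget rules out the batch of four even though it has the highest throughput, which is the kind of trade-off a production deployment has to tune.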

2. How NVIDIA AI Inference Platform Can Help

- NVIDIA L4 Tensor Core GPUs provide efficient AI acceleration.

- NVIDIA TensorRT optimizes models for low-latency inference.

- NVIDIA Triton Inference Server enables smooth deployment and management.

- Triton's Model Ensembles feature chains several models into a single pipeline, so a multi-step workflow can be served as one request.
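To illustrate the idea of model chaining, here is a minimal pure-Python sketch. It does not use the Triton API. It only loosely mirrors how an ensemble maps each step's inputs and outputs onto named pipeline tensors. The `Step` type, `run_ensemble`, the tensor names, and both stand-in models are hypothetical.

```python
from typing import Callable, Dict, List, NamedTuple

Tensors = Dict[str, object]


class Step(NamedTuple):
    model: Callable[[Tensors], Tensors]  # stand-in for a deployed model
    input_map: Dict[str, str]   # model input name  -> pipeline tensor name
    output_map: Dict[str, str]  # model output name -> pipeline tensor name


def run_ensemble(steps: List[Step], request: Tensors) -> Tensors:
    """Run each step in order, passing tensors through a shared pool."""
    pool = dict(request)
    for step in steps:
        inputs = {name: pool[src] for name, src in step.input_map.items()}
        outputs = step.model(inputs)
        for name, dst in step.output_map.items():
            pool[dst] = outputs[name]
    return pool


# Hypothetical stand-ins: a real pipeline would call an image generator
# and a post-processing model instead of formatting strings.
def fake_sdxl(inputs: Tensors) -> Tensors:
    return {"image": f"image_for<{inputs['prompt']}>"}


def fake_upscaler(inputs: Tensors) -> Tensors:
    return {"upscaled": f"upscaled({inputs['image']})"}


PIPELINE = [
    Step(fake_sdxl, {"prompt": "PROMPT"}, {"image": "RAW_IMAGE"}),
    Step(fake_upscaler, {"image": "RAW_IMAGE"}, {"upscaled": "FINAL_IMAGE"}),
]

if __name__ == "__main__":
    result = run_ensemble(PIPELINE, {"PROMPT": "a red sneaker on white"})
    print(result["FINAL_IMAGE"])
```

The caller supplies only the prompt and reads only the final tensor. The intermediate image never leaves the pipeline, which is the main appeal of chaining models on the server side.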

3. Let’s Enhance: Harnessing the Power of NVIDIA AI Inference Platform

- Let’s Enhance uses Triton Inference Server to deploy AI models on NVIDIA GPUs.

- The company has more than three years of experience deploying and serving over 30 AI models.

- It builds its AI solutions on the NVIDIA AI Inference Platform.

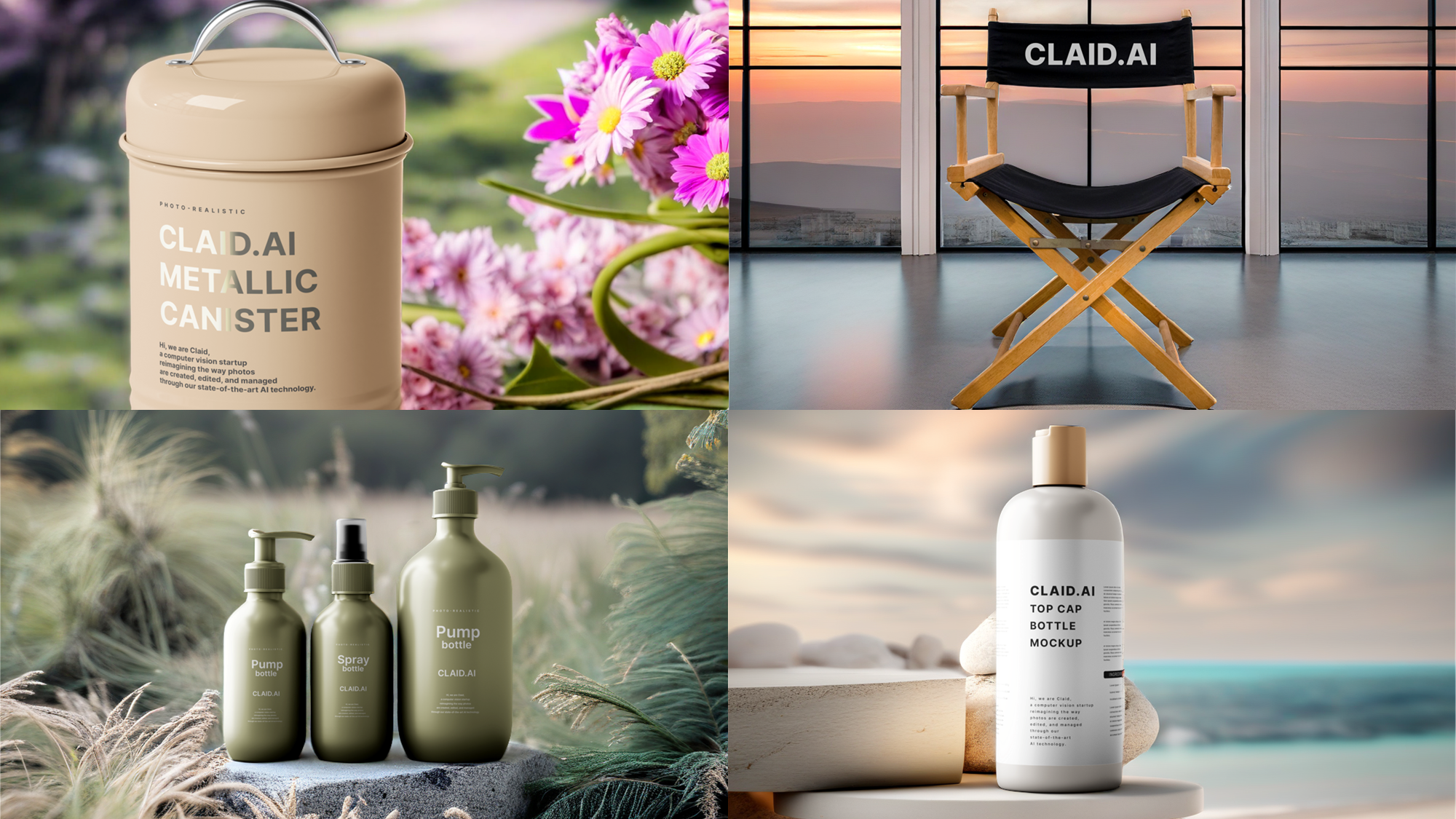

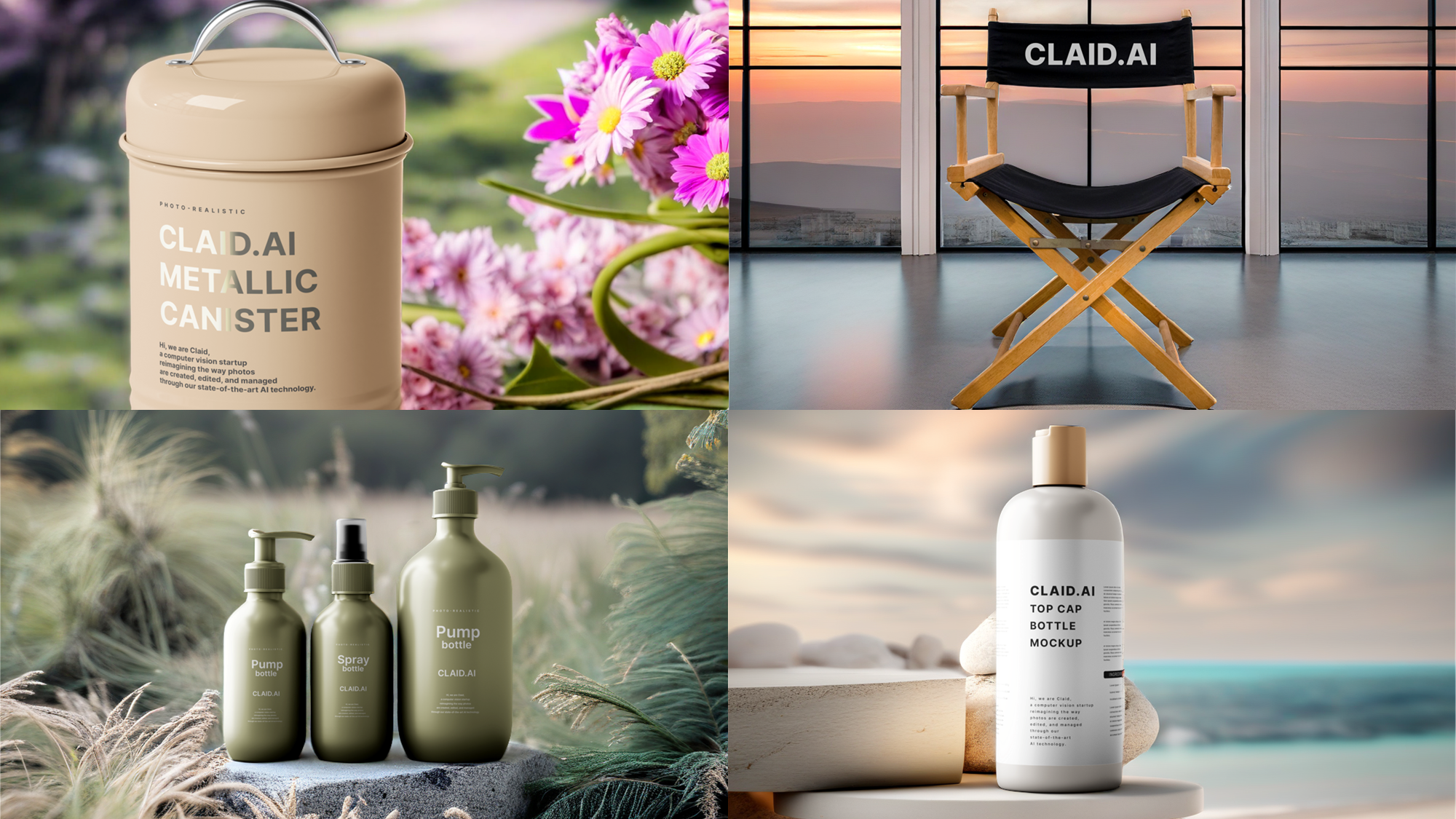

4. Enhancing Product Images with SDXL on Google Cloud and NVIDIA AI Inference Platform

- Use Google Cloud’s G2 instances powered by NVIDIA L4 GPUs for SDXL.

- Deploy TensorRT-optimized SDXL for the best price-performance.

- Follow the steps to generate high-quality images with Stable Diffusion XL.
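As a rough sketch of what requesting an image from a Triton-served SDXL model could involve, the following builds a request body in the KServe v2 inference protocol, which Triton's HTTP endpoint accepts. The model name `sdxl`, the tensor names `prompt` and `generated_image`, the host, and the tensor shape are all assumptions. A real deployment defines these in its own model configuration.

```python
import json


def build_infer_request(prompt: str,
                        input_name: str = "prompt",
                        output_name: str = "generated_image") -> dict:
    """Build a KServe v2 style inference request body for one text prompt."""
    return {
        "inputs": [
            {
                "name": input_name,   # assumed tensor name
                "shape": [1],         # assumed; must match the model config
                "datatype": "BYTES",  # string tensors are sent as BYTES
                "data": [prompt],
            }
        ],
        "outputs": [{"name": output_name}],  # assumed tensor name
    }


def infer_url(host: str, model_name: str) -> str:
    """URL of the v2 inference endpoint for a named model."""
    return f"http://{host}/v2/models/{model_name}/infer"


if __name__ == "__main__":
    body = build_infer_request("studio photo of a leather backpack")
    print(infer_url("localhost:8000", "sdxl"))  # hypothetical host and model
    print(json.dumps(body, indent=2))
```

The sketch stops at building the payload. Sending it requires a running server, and the response format depends on how the deployed model encodes its output image.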