Inside NVIDIA Blackwell Ultra: The Chip Powering the AI Factory Era

Table of Contents

- Introduction

- NVIDIA Blackwell Ultra Architecture

- Tensor Cores and Transformer Engine

- Memory Capacity and Bandwidth

- Performance-Efficiency Advancements

- Interactive Pareto Frontier Explainer

- Summary

Introduction

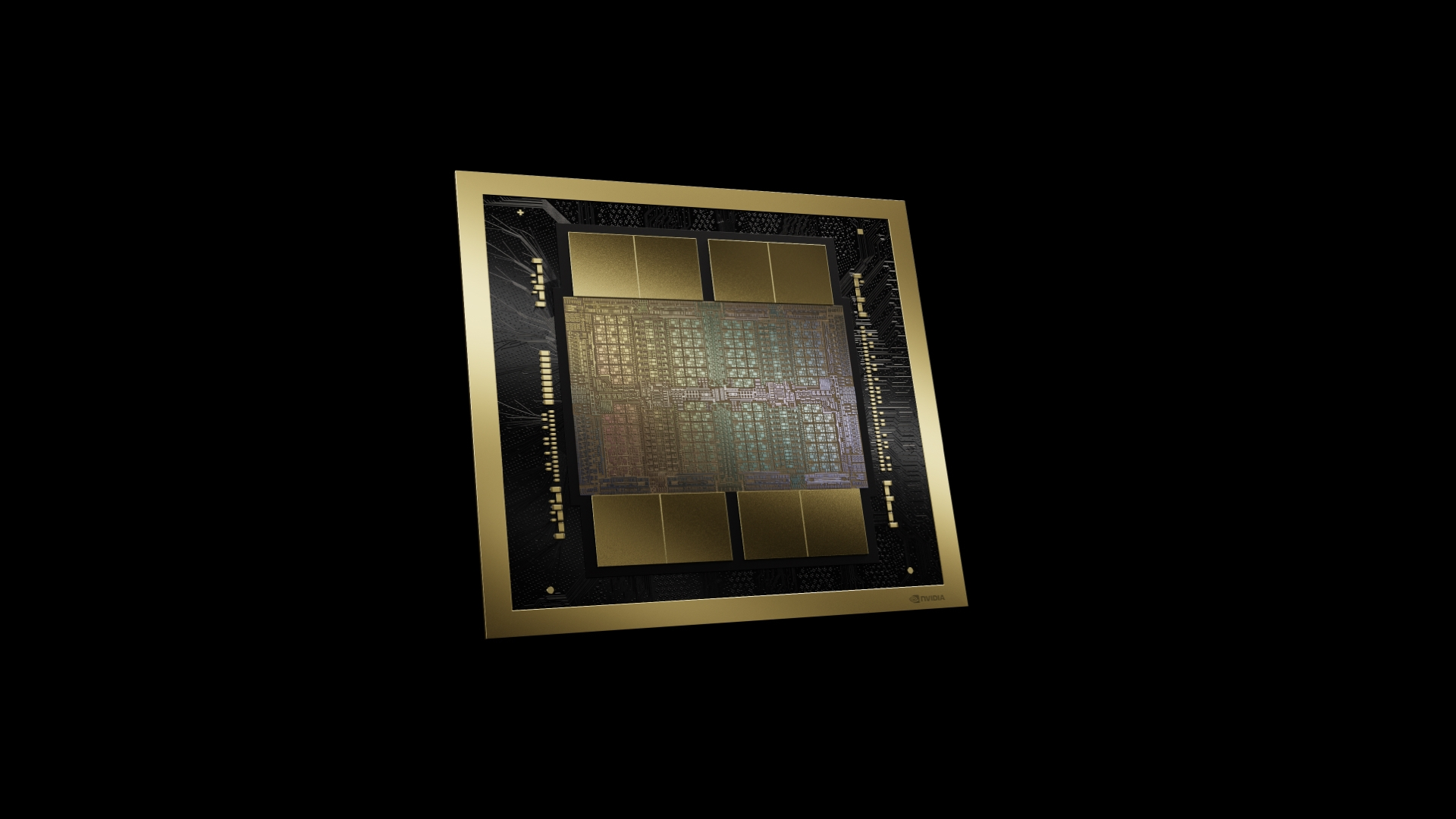

The NVIDIA Blackwell Ultra GPU is the latest addition to the NVIDIA Blackwell architecture family, designed to accelerate training and AI reasoning in AI factories. Manufactured on the TSMC 4NP process with 208 billion transistors, the Blackwell Ultra GPU offers enhanced performance, scalability, and efficiency for real-time AI services.

NVIDIA Blackwell Ultra Architecture

Blackwell Ultra combines silicon-level innovations with system-level integration. It features fifth-generation Tensor Cores with a second-generation Transformer Engine, optimized for precision formats including FP8, FP6, and NVFP4. Each Streaming Multiprocessor (SM) contains four Tensor Cores, for a total of 640 Tensor Cores across 160 SMs.
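
As a quick sanity check, the Tensor Core total follows directly from the per-SM count and the SM count quoted above. The variable names below are illustrative only.

```python
# Figures taken from the paragraph above; names are illustrative.
TENSOR_CORES_PER_SM = 4
SM_COUNT = 160

total_tensor_cores = TENSOR_CORES_PER_SM * SM_COUNT
print(total_tensor_cores)  # 640
```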

Tensor Cores and Transformer Engine

The Tensor Cores in Blackwell Ultra are optimized for the NVFP4 precision format, offering higher throughput and lower latency for dense and sparse AI workloads. Each SM provides 256 KB of Tensor Memory (TMEM), and the Tensor Cores support dual-thread-block MMA. NVFP4 delivers nearly FP8-equivalent accuracy while significantly reducing memory footprint.
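
To make the memory-footprint claim concrete, here is a minimal sketch of block-scaled 4-bit quantization in the spirit of NVFP4. It relies on details that are not stated in this article and come from NVIDIA's public description of the format: values are 4-bit E2M1 floats, and each block of 16 values shares one FP8 scale factor. The function names are hypothetical, and the scale is kept as an ordinary Python float for simplicity, so this illustrates the idea rather than the hardware behavior.

```python
# Magnitudes representable in E2M1 (1 sign, 2 exponent, 1 mantissa bit).
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16  # assumption: one shared scale per 16 values


def quantize_block(values):
    """Quantize one block to the E2M1 grid using a shared scale.

    Simplification: the scale stays a Python float. The real format stores
    it in FP8 (E4M3) and adds a per-tensor FP32 scale on top.
    """
    assert len(values) == BLOCK_SIZE
    amax = max(abs(v) for v in values)
    # Map the largest magnitude in the block onto the largest grid value.
    scale = amax / E2M1_GRID[-1] if amax > 0 else 1.0
    codes = []
    for v in values:
        target = abs(v) / scale
        # Round to the nearest representable magnitude.
        mag = min(E2M1_GRID, key=lambda g: abs(g - target))
        codes.append(-mag if v < 0 else mag)
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate values from the shared scale and 4-bit codes."""
    return [scale * c for c in codes]


def bits_per_value(value_bits=4, scale_bits=8, block=BLOCK_SIZE):
    """Average storage per element, ignoring the single per-tensor scale."""
    return value_bits + scale_bits / block


block = [0.1 * i - 0.8 for i in range(BLOCK_SIZE)]
scale, codes = quantize_block(block)
restored = dequantize_block(scale, codes)
max_err = max(abs(a - b) for a, b in zip(block, restored))

nvfp4_bits = bits_per_value()
print(f"bits per value: {nvfp4_bits}")
print(f"vs FP8: {8 / nvfp4_bits:.2f}x smaller, vs FP16: {16 / nvfp4_bits:.2f}x smaller")
print(f"max abs reconstruction error in sample block: {max_err:.4f}")
```

Under these assumptions, each value costs 4.5 bits on average once the shared scale is amortized over the block, which is where the footprint reduction relative to FP8 and FP16 comes from.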

Memory Capacity and Bandwidth

Blackwell Ultra provides 288 GB of HBM3e per GPU, 3.6x the on-package memory of H100. The memory subsystem comprises 8 HBM3e stacks behind 16 × 512-bit controllers and delivers 8 TB/s of bandwidth per GPU.
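
The memory figures above can be cross-checked with a few lines of arithmetic. The 80 GB H100 capacity is an assumption brought in from outside this article, and the per-stack and sweep-time values are derived here by even division rather than quoted from NVIDIA.

```python
# Figures from the paragraph above.
HBM_CAPACITY_GB = 288
HBM_STACKS = 8
CONTROLLERS = 16
CONTROLLER_WIDTH_BITS = 512
BANDWIDTH_TB_S = 8

# Assumption (not stated in the text): H100 carries 80 GB of HBM.
H100_CAPACITY_GB = 80

capacity_ratio = HBM_CAPACITY_GB / H100_CAPACITY_GB
bus_width_bits = CONTROLLERS * CONTROLLER_WIDTH_BITS

# Derived by even division across stacks; a simplification.
capacity_per_stack_gb = HBM_CAPACITY_GB / HBM_STACKS
bandwidth_per_stack_tb_s = BANDWIDTH_TB_S / HBM_STACKS

# Time to stream the full memory once at peak bandwidth, in milliseconds.
full_sweep_ms = HBM_CAPACITY_GB / (BANDWIDTH_TB_S * 1000) * 1000

print(f"capacity vs H100: {capacity_ratio:.1f}x")
print(f"aggregate bus width: {bus_width_bits} bits")
print(f"per stack: {capacity_per_stack_gb:.0f} GB, {bandwidth_per_stack_tb_s:.0f} TB/s")
print(f"full-memory sweep at peak bandwidth: {full_sweep_ms:.0f} ms")
```

The sweep time is a useful lower bound for memory-bound workloads: reading every byte of on-package memory once cannot finish faster than this at the quoted peak bandwidth.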

Performance-Efficiency Advancements

Blackwell Ultra increases NVFP4 compute and memory capacity by 50% compared to Blackwell, allowing for larger models and faster throughput without compromising efficiency. It delivers 15 petaFLOPS of NVFP4 performance, 1.5x that of the Blackwell GPU.
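
A short calculation shows what the 1.5x figures imply. The Blackwell baselines below are back-computed from the numbers in this article, not quoted. The ridge point is the standard roofline-model ratio of peak compute to peak memory bandwidth, using the 8 TB/s figure from the memory section and treating 15 petaFLOPS as the peak rate. It is an illustrative estimate, not an NVIDIA-published value.

```python
# Figures from the article.
ULTRA_NVFP4_PFLOPS = 15
ULTRA_HBM_GB = 288
UPLIFT = 1.5
BANDWIDTH_TB_S = 8

# Baselines implied by the 1.5x uplift (derived, not quoted).
implied_blackwell_pflops = ULTRA_NVFP4_PFLOPS / UPLIFT
implied_blackwell_hbm_gb = ULTRA_HBM_GB / UPLIFT

# Roofline ridge point: FLOPs per byte of HBM traffic needed before a
# kernel stops being limited by memory bandwidth.
peak_flops = ULTRA_NVFP4_PFLOPS * 1e15
peak_bytes_per_s = BANDWIDTH_TB_S * 1e12
ridge_flops_per_byte = peak_flops / peak_bytes_per_s

print(f"implied Blackwell NVFP4: {implied_blackwell_pflops:.0f} petaFLOPS")
print(f"implied Blackwell HBM: {implied_blackwell_hbm_gb:.0f} GB")
print(f"ridge point: {ridge_flops_per_byte:.0f} FLOP/byte")
```

Kernels with lower arithmetic intensity than the ridge point are bound by memory bandwidth rather than Tensor Core throughput, which is one reason the extra capacity and bandwidth matter alongside the compute uplift.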

Interactive Pareto Frontier Explainer

To help readers understand how hardware innovations affect data center efficiency and user experience, NVIDIA offers an interactive Pareto Frontier explainer. The tool shows how different hardware and deployment configurations affect both measures in AI factories.
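
For readers unfamiliar with the term, a Pareto frontier is the set of configurations that no other configuration beats on both axes at once. The sketch below computes one for made-up data. The axis meanings (per-user interactivity and per-GPU throughput), the function name, and the sample points are all hypothetical and are not taken from NVIDIA's tool.

```python
def pareto_frontier(points):
    """Return the points not dominated on both axes (higher is better)."""
    frontier = []
    best_y = float("-inf")
    # Visit points from highest x to lowest; on ties, highest y first.
    for point in sorted(points, key=lambda p: (-p[0], -p[1])):
        # Every point already seen has x at least as large, so this point
        # survives only if its y beats all of theirs.
        if point[1] > best_y:
            frontier.append(point)
            best_y = point[1]
    return frontier


# Hypothetical deployment configurations:
# (tokens/s per user, tokens/s per GPU)
configs = [
    (10, 900),
    (20, 800),
    (20, 600),
    (40, 500),
    (50, 550),
    (80, 200),
    (70, 150),
]

frontier = pareto_frontier(configs)
print(frontier)
```

Each point on the frontier represents a different trade-off between serving individual users quickly and serving many users per GPU. Points off the frontier are strictly worse choices than some alternative.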

Summary

The NVIDIA Blackwell Ultra GPU advances on its predecessors in architecture, Tensor Cores, Transformer Engine, memory capacity, and performance efficiency. These gains are aimed at the demands of AI factories and real-time AI services.