Optimizing Inference on Large Language Models with NVIDIA TensorRT-LLM, Now Publicly Available

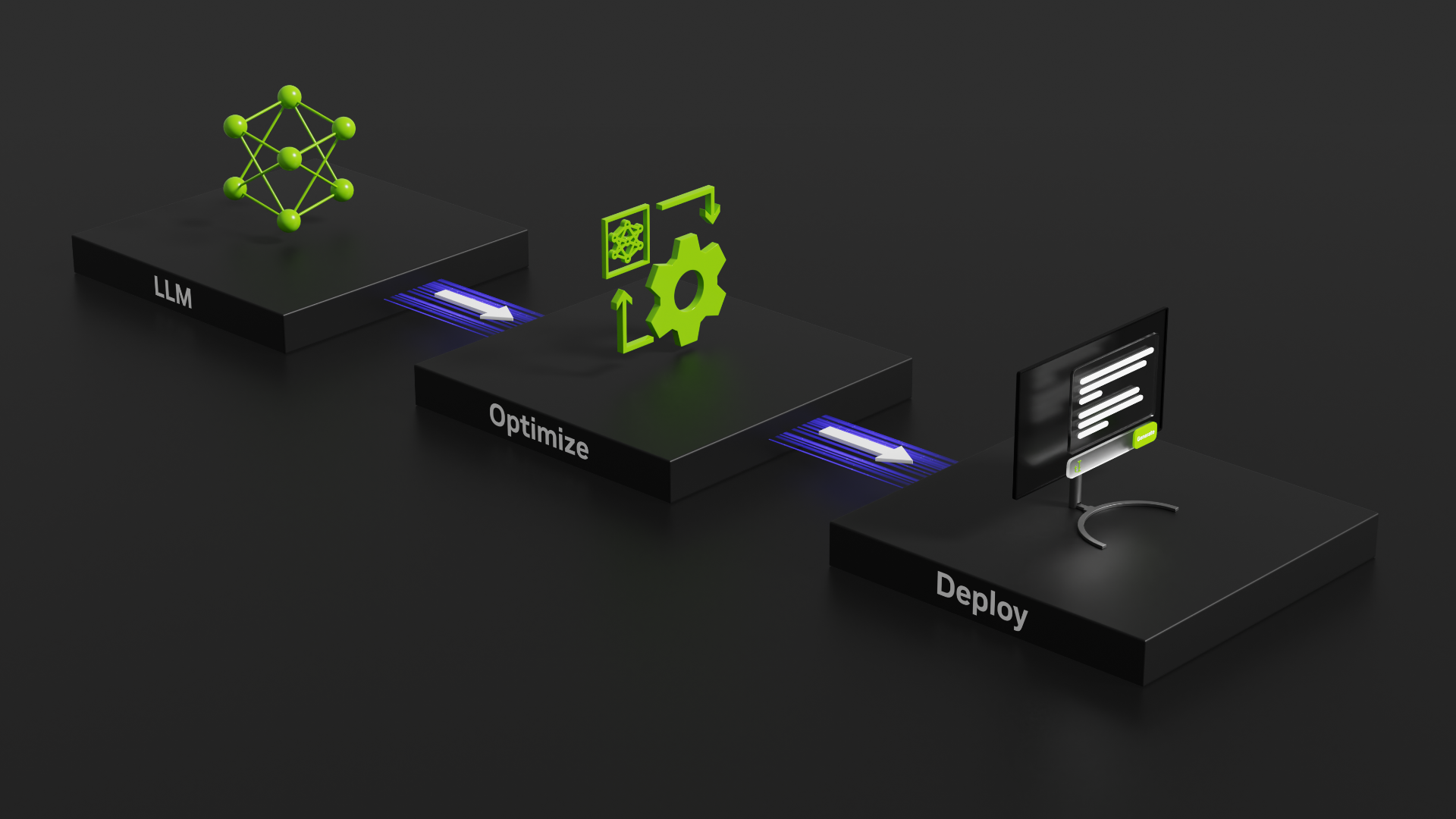

The NVIDIA TensorRT-LLM library, now publicly available, accelerates and optimizes inference for large language models (LLMs). This open-source library, also available as part of the NVIDIA NeMo framework, provides a Python API for defining and building models, and applies a range of performance optimizations when it builds them.

Highlights of TensorRT-LLM include support for popular LLMs such as Llama 1 and 2, ChatGLM, Falcon, MPT, Baichuan, and StarCoder. It also offers features like in-flight batching, paged attention, and multi-GPU multi-node (MGMN) inference. It supports the NVIDIA Ampere, NVIDIA Ada Lovelace, and NVIDIA Hopper GPU architectures, and offers native Windows support in beta.
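
To give a feel for the idea behind paged attention, here is a minimal conceptual sketch in plain Python. It is not TensorRT-LLM code: the `PagedKVCache` class, its methods, and the block sizes are hypothetical names chosen for illustration. The sketch assumes the key-value cache is split into fixed-size blocks and that each sequence keeps a table of the blocks it owns, so memory is allocated as a sequence grows rather than reserved up front for the maximum length.

```python
class PagedKVCache:
    """Toy block allocator: maps each sequence to a list of fixed-size blocks."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}   # sequence id -> list of physical block ids
        self.lengths = {}        # sequence id -> number of tokens stored

    def append_token(self, seq_id):
        """Reserve space for one more token, allocating a block only when needed."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length == len(table) * self.block_size:
            # Every block owned by this sequence is full (or it owns none yet).
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks")
            table.append(self.free_blocks.pop(0))
        self.lengths[seq_id] = length + 1

    def release(self, seq_id):
        """Return a finished sequence's blocks to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedKVCache(num_blocks=4, block_size=4)
for _ in range(5):
    cache.append_token("a")   # 5 tokens need two blocks of size 4
for _ in range(3):
    cache.append_token("b")   # 3 tokens fit in one block
table_a = list(cache.block_tables["a"])
table_b = list(cache.block_tables["b"])
cache.release("a")
free_after_release = len(cache.free_blocks)
print(table_a, table_b, free_after_release)
```

In this toy model, a sequence never holds more than one partially filled block, and blocks released by a finished sequence become available to others right away.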

Over the past two years, NVIDIA has collaborated with leading LLM companies to accelerate and optimize LLM inference. Companies such as Anyscale, Baichuan, Grammarly, Meta, and Tabnine have used TensorRT-LLM to accelerate their LLMs.

To illustrate how to use TensorRT-LLM, the following sections outline how to deploy Llama 2, a popular LLM, with TensorRT-LLM and NVIDIA Triton on Linux.

Getting Started

To use TensorRT-LLM, you'll need a set of trained weights. You can either use your own weights trained in NVIDIA NeMo or obtain pretrained weights from repositories like the Hugging Face Hub. You'll also need a model definition written in the TensorRT-LLM Python API.

Building the Model

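TensorRT-LLM allows you to define the layers of your neural network as a graph of operations built from NVIDIA TensorRT primitives. Building the model compiles this definition, together with the trained weights, into an optimized engine. The Llama 2 build in this example uses some of the optimizations available in TensorRT-LLM.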

Running the Model Locally

Once you have the model engine, you can run inference with it directly on your own machine using the TensorRT-LLM runtime backend.

Deploying with Triton Inference Server

To create a production-ready deployment of your LLM, you can use the NVIDIA Triton Inference Server. A new Triton Inference Server backend for TensorRT-LLM leverages the TensorRT-LLM C++ runtime for efficient inference execution. It includes techniques like in-flight batching and paged KV-caching.
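
The benefit of in-flight batching can be sketched with a small simulation. This is a conceptual toy in plain Python, not the scheduler used by TensorRT-LLM or Triton: the `Request` class and both functions are hypothetical, and the model assumes each decoding step produces exactly one token for every active request. The in-flight version refills a batch slot as soon as a request finishes, while the static baseline waits for the longest request in each batch.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    name: str
    tokens_needed: int      # output tokens still to generate (assumed >= 1)
    finished_at: int = -1   # decoding step at which the request completed


def run_inflight(requests, max_batch_size):
    """Toy scheduler: refill free batch slots after every decoding step."""
    waiting = deque(requests)
    active = []
    step = 0
    while waiting or active:
        # Admit waiting requests as soon as a slot is free.
        while waiting and len(active) < max_batch_size:
            active.append(waiting.popleft())
        step += 1
        # One decoding step produces one token for every active request.
        for req in active:
            req.tokens_needed -= 1
            if req.tokens_needed == 0:
                req.finished_at = step
        # Evict finished requests immediately instead of waiting for the batch.
        active = [r for r in active if r.tokens_needed > 0]
    return step


def run_static(requests, max_batch_size):
    """Baseline: each batch runs until its longest request finishes."""
    step = 0
    for i in range(0, len(requests), max_batch_size):
        batch = requests[i:i + max_batch_size]
        step += max(r.tokens_needed for r in batch)
    return step


lengths = [2, 8, 3, 3, 2]  # illustrative output lengths, in tokens
inflight_steps = run_inflight(
    [Request(f"r{i}", n) for i, n in enumerate(lengths)], max_batch_size=2)
static_steps = run_static(
    [Request(f"r{i}", n) for i, n in enumerate(lengths)], max_batch_size=2)
print(inflight_steps, static_steps)  # prints: 10 13
```

With these made-up lengths, the in-flight schedule finishes in 10 decoding steps versus 13 for static batching, because short requests no longer hold a slot idle while a long one completes.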

To deploy the model with Triton Inference Server, you need to build the backend, create a model repository, and modify the configuration files with the necessary information. Finally, you can launch the Triton server using the Docker container.
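
As a rough sketch of the model repository step, the snippet below creates the directory layout that Triton expects: one directory per model containing a `config.pbtxt` file and a numbered version subdirectory. The helper `create_model_repository`, the model name `tensorrt_llm`, and the configuration values are assumptions for illustration. A real configuration for the TensorRT-LLM backend needs more than this, including input and output tensor definitions and the path to the built engine, which are omitted here.

```python
from pathlib import Path
import tempfile

# Illustrative config only: the backend name and values are assumptions,
# and the tensor definitions and engine path a real deployment needs are omitted.
CONFIG_TEMPLATE = """\
name: "{name}"
backend: "tensorrtllm"
max_batch_size: {max_batch_size}
"""


def create_model_repository(root, name, max_batch_size=8):
    """Create <root>/<name>/config.pbtxt and an empty version directory <root>/<name>/1/."""
    model_dir = Path(root) / name
    version_dir = model_dir / "1"
    version_dir.mkdir(parents=True, exist_ok=True)
    config_path = model_dir / "config.pbtxt"
    config_path.write_text(
        CONFIG_TEMPLATE.format(name=name, max_batch_size=max_batch_size))
    return config_path


# Use a temporary directory so the sketch does not touch an existing repository.
repo_root = tempfile.mkdtemp()
config_path = create_model_repository(repo_root, "tensorrt_llm")
print(config_path.read_text())
```

The server is then pointed at the repository root when it is launched, and the built engine files are referenced from the model's configuration.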

By using TensorRT-LLM and Triton Inference Server together, you can optimize and deploy your LLMs for efficient inference while serving multiple users concurrently.