Protecting Sensitive Data and AI Models with Confidential Computing

Table of Contents

- Introduction

- Secure AI on NVIDIA H100

- Unlocking secure AI: Use cases empowered by confidential computing on NVIDIA H100

- Confidential AI training

- Confidential AI inference

- AI IP protection for ISVs and enterprises

- Confidential federated learning

- NVIDIA platforms for accelerated confidential computing on-premises

Introduction

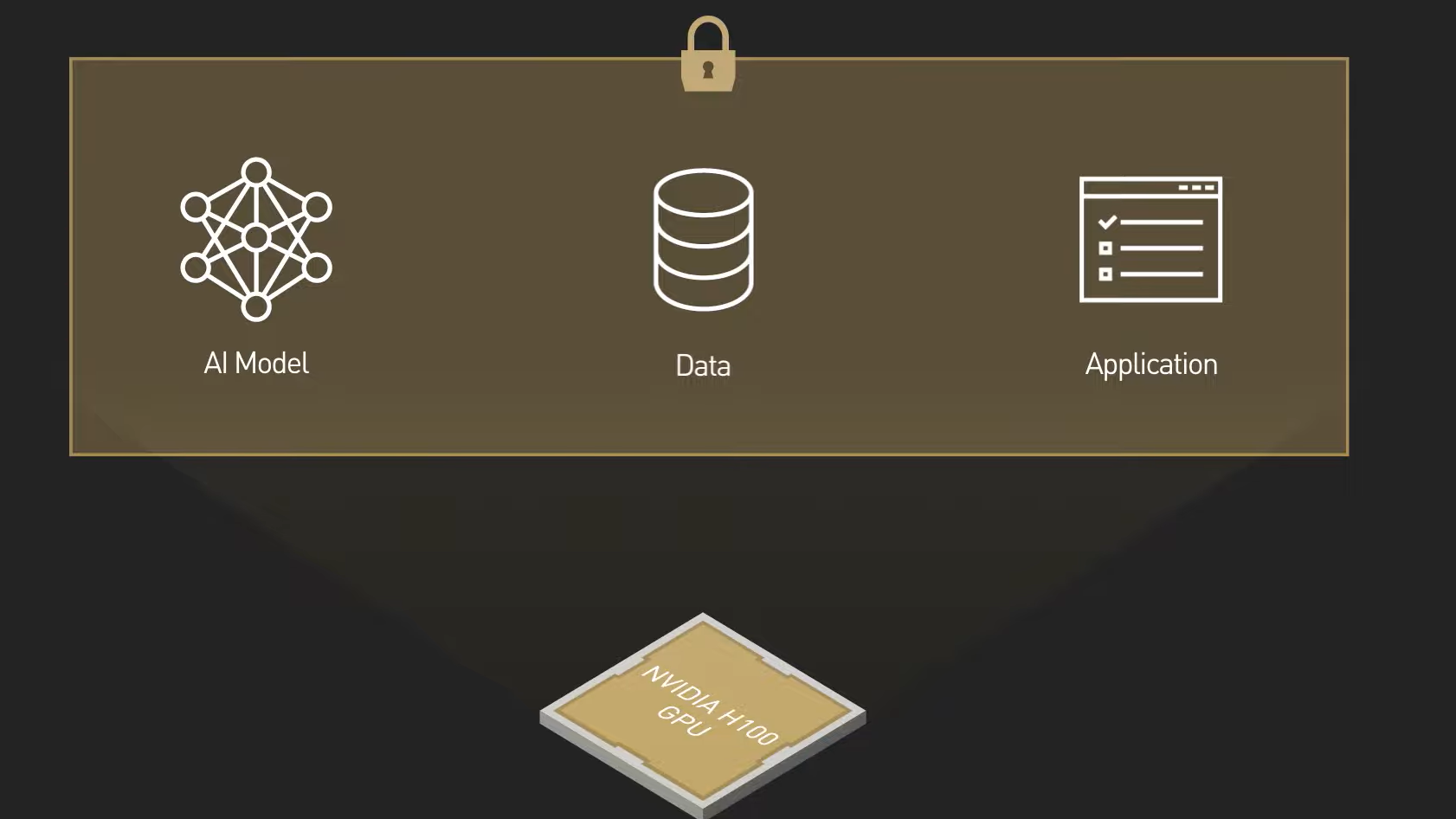

Confidential computing is a hardware-based security technology that protects sensitive data and AI models while they are in use. It creates a Trusted Execution Environment (TEE) that prevents unauthorized parties, including the host operating system, the hypervisor, and infrastructure administrators, from accessing or modifying the data and code inside it. This gives companies that process sensitive data or intellectual property an extra layer of security.

Secure AI on NVIDIA H100

Secure AI is a hardware-based security feature built into the NVIDIA H100 Tensor Core GPU. It enables organizations in regulated industries such as healthcare, finance, and the public sector to safeguard the confidentiality and integrity of their sensitive data and AI models. Because these protections are part of the GPU architecture, businesses can deploy AI applications on-premises, in the cloud, or at the edge with their data and models protected.

Unlocking secure AI: Use cases empowered by confidential computing on NVIDIA H100

NVIDIA H100 GPUs deliver high performance, versatility, and scalability for AI workloads while keeping them secure. The following use cases show what confidential computing on H100 makes possible:

Confidential AI training

Industries that handle sensitive data, such as healthcare and finance, are subject to strict data-protection regulations. Confidential computing on NVIDIA H100 GPUs keeps data and AI models confidential throughout the training process, which helps organizations meet regulatory requirements and protects them from unauthorized access.

Confidential AI inference

End users who provide inputs to a deployed AI model need their data protected for privacy and regulatory compliance. NVIDIA H100 GPUs with confidential computing prevent both the customer input data and the AI model from being viewed or modified during inference. This gives end users an added layer of trust when adopting AI-enabled services, and lets enterprises be confident that their valuable AI models are protected while in use.

AI IP protection for ISVs and enterprises

Independent software vendors (ISVs) must protect their intellectual property when deploying applications on-premises or at remote locations. Confidential computing on NVIDIA H100 GPUs enables ISVs to protect their valuable IP from unauthorized access or modification, even by individuals with physical access to the deployment infrastructure.

Confidential federated learning

Building and improving AI models for use cases such as fraud detection, medical imaging, and drug development requires diverse training datasets, which are often spread across multiple organizations. Confidential federated learning with NVIDIA H100 adds security by protecting the data and local AI models at each participating site from unauthorized access. It also protects the global AI model during aggregation, so the aggregated model remains secure from unauthorized access.
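The aggregation step in federated learning can be sketched as a sample-weighted average of per-site model weights, in the style of Federated Averaging (FedAvg). This is a minimal illustration under stated assumptions, not NVIDIA's implementation: the function name `federated_average`, the dictionary-of-lists weight format, and the choice of FedAvg itself are hypothetical for this example. The confidentiality comes from running this step inside a TEE on the aggregation server; the code only shows what that protected computation does.

```python
from typing import Dict, List

# Hypothetical weight format: parameter name -> flat list of values.
Weights = Dict[str, List[float]]


def federated_average(site_updates: List[Weights], sample_counts: List[int]) -> Weights:
    """Return the sample-weighted average of per-site model weights (FedAvg-style).

    Assumption: in a confidential deployment this runs inside the aggregator's
    TEE, so individual site updates are not visible in plaintext to the host.
    That protection comes from the hardware, not from this code.
    """
    if not site_updates or len(site_updates) != len(sample_counts):
        raise ValueError("need one sample count per site update")
    total = sum(sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    averaged: Weights = {}
    for name in site_updates[0]:
        length = len(site_updates[0][name])
        acc = [0.0] * length
        for update, count in zip(site_updates, sample_counts):
            layer = update[name]
            if len(layer) != length:
                raise ValueError(f"shape mismatch for parameter {name!r}")
            # Each site contributes in proportion to its share of the samples.
            for i, value in enumerate(layer):
                acc[i] += value * count / total
        averaged[name] = acc
    return averaged


if __name__ == "__main__":
    # Two hypothetical sites with a single two-element parameter "w".
    site_a = {"w": [1.0, 2.0]}
    site_b = {"w": [3.0, 4.0]}
    print(federated_average([site_a, site_b], sample_counts=[100, 300]))
    # prints {'w': [2.5, 3.5]}
```

Weighting each site by its sample count keeps sites with more data from being underrepresented in the global model; a plain mean is the special case where all counts are equal.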

NVIDIA platforms for accelerated confidential computing on-premises

Getting started with confidential computing on an NVIDIA H100 GPU requires a CPU that supports a virtual machine (VM)-based TEE technology, such as AMD SEV-SNP or Intel TDX.
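Because the GPU's protections depend on a CPU-based confidential VM, it can help to confirm that the guest VM is actually running inside such a TEE. The sketch below assumes a Linux guest whose kernel exposes the AMD SEV-SNP guest driver as `/dev/sev-guest` or the Intel TDX guest driver as `/dev/tdx_guest`; device names can vary by kernel version, and a missing node may only mean the driver is not loaded. The function `detect_vm_tee` is hypothetical and only checks for these device nodes. It does not perform attestation or verify the GPU's confidential computing mode.

```python
import os
from typing import Callable, Optional

# Assumption: device nodes created by recent Linux guest drivers for each
# VM-based TEE. Names may differ on other kernels or distributions.
_TEE_DEVICE_NODES = {
    "sev-guest": "AMD SEV-SNP",
    "tdx_guest": "Intel TDX",
}


def detect_vm_tee(
    dev_dir: str = "/dev",
    exists: Callable[[str], bool] = os.path.exists,
) -> Optional[str]:
    """Return the name of the CPU TEE whose guest device node is present, or None.

    `exists` is injectable so the check can be exercised without real devices.
    A None result is not proof that the VM is unprotected; the driver may
    simply not be loaded.
    """
    for node, tee_name in _TEE_DEVICE_NODES.items():
        if exists(os.path.join(dev_dir, node)):
            return tee_name
    return None


if __name__ == "__main__":
    tee = detect_vm_tee()
    if tee is None:
        print("No SEV-SNP or TDX guest device found; this may not be a confidential VM.")
    else:
        print(f"Guest device for {tee} found; this appears to be a confidential VM.")
```

The `exists` parameter defaults to `os.path.exists` and can be replaced with a stub, which makes the check testable on machines without either TEE.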